Running a Scrapy spider in a GCP cloud function

What you’ve probably tried that didn’t work

The Scrapy docs explain how to run Scrapy from a script. My first try was to simply copy that code and run it in a Cloud Function.

from ... import MySpider

from scrapy.crawler import CrawlerProcess

from scrapy.utils.project import get_project_settings

def my_cloud_function(event, context):

process = CrawlerProcess(settings={

'FEED_FORMAT': 'json',

'FEED_URI': 'items.json'

})

process.crawl(MySpider)

process.start()

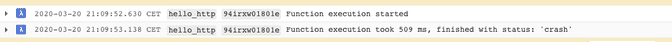

return 'ok'This worked, but surprisingly only on the first execution of the each function instance. Starting the second invocation, I started seeing in the logs:

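```
Function execution took 509 ms, finished with status: 'crash'
```

This is because my Cloud Function is not idempotent: it cannot safely run more than once in the same process. Under the hood, Scrapy uses Twisted, which sets up many global objects to power its event-driven machinery, and the Twisted reactor cannot be restarted once it has stopped.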

When asked to run a Cloud Function, GCP will either create a new instance of your function (they call this a cold start), or reuse one of the instances it previously created. Therefore it’s very important for your function to be idempotent.
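To make the failure mode concrete, here is a toy model in plain Python. It is not Scrapy or Twisted code: `OneShotLoop`, `LOOP`, and `handler` are hypothetical names standing in for a process-wide event loop and a function entry point. The only point is that global state surviving in a reused instance makes the second call fail.

```python
class OneShotLoop:
    """Toy stand-in for a process-wide event loop that cannot be restarted."""

    def __init__(self):
        self.stopped = False

    def run(self):
        if self.stopped:
            raise RuntimeError("loop cannot be restarted")
        # ... the real work would happen here ...
        self.stopped = True


# Created once, at import time, and kept for as long as the instance lives.
LOOP = OneShotLoop()


def handler(event, context):
    """Hypothetical entry point that relies on the global loop."""
    LOOP.run()
    return "ok"
```

Calling `handler(None, None)` twice in the same interpreter returns `"ok"` the first time and raises `RuntimeError` the second time, which mirrors what happens when a warm instance is reused.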

My Cloud Function here cannot run twice, and you can see this even in your local environment:

```python
my_cloud_function(None, None)  # first call: works
my_cloud_function(None, None)  # second call: fails, the reactor cannot be restarted
```

The solution: wrapping Scrapy in its own child process

```python
from multiprocessing import Process, Queue

from ... import MySpider  # placeholder: import the spider from your own project
from scrapy.crawler import CrawlerProcess
from scrapy.utils.project import get_project_settings


def my_cloud_function(event, context):

    # Note: a nested function as the process target relies on the "fork"
    # start method; with "spawn" (the default on macOS and Windows) the
    # target must be a module-level function.
    def script(queue):
        try:
            settings = get_project_settings()
            settings.setdict({
                'LOG_LEVEL': 'ERROR',
                'LOG_ENABLED': True,
            })
            process = CrawlerProcess(settings)
            process.crawl(MySpider)
            process.start()
            queue.put(None)  # signal success to the parent
        except Exception as e:
            queue.put(e)     # hand the error back to the parent

    queue = Queue()

    # wrap the spider in a child process
    main_process = Process(target=script, args=(queue,))
    main_process.start()  # start the process
    main_process.join()   # block until the spider finishes

    result = queue.get()  # check the process did not return an error
    if result is not None:
        raise result

    return 'ok'
```

Again, you can check that this works locally by calling your function twice in a row.
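If you want to try the isolation pattern without installing Scrapy, the sketch below uses only the standard library. `OneShotLoop`, `_worker`, and `run_isolated` are hypothetical names, and the toy loop merely imitates a global object that cannot be reused. It also assumes the "fork" start method, which is available on Linux and most Unix systems but not on Windows.

```python
import multiprocessing


class OneShotLoop:
    """Toy stand-in for a global event loop that refuses to start twice."""

    def __init__(self):
        self.used = False

    def run(self):
        if self.used:
            raise RuntimeError("loop cannot be restarted")
        self.used = True


LOOP = OneShotLoop()  # process-wide state, like a global reactor


def _worker(queue):
    """Runs in the child: report None on success, or the exception raised."""
    try:
        LOOP.run()
        queue.put(None)
    except Exception as exc:
        queue.put(exc)


def run_isolated():
    # Assumption: "fork" exists on this platform (Linux and most Unix systems).
    ctx = multiprocessing.get_context("fork")
    queue = ctx.Queue()
    child = ctx.Process(target=_worker, args=(queue,))
    child.start()
    child.join()  # fine here because the queued payload is tiny
    if child.exitcode != 0:
        # The child died before it could report anything.
        raise RuntimeError(f"child exited with code {child.exitcode}")
    result = queue.get()
    if result is not None:
        raise result
    return "ok"
```

Each call to `run_isolated()` forks a fresh child with its own copy of `LOOP`, so the parent's copy is never marked as used and repeated calls keep returning `"ok"`. Checking `exitcode` before reading the queue is a small addition to the original pattern: it avoids blocking forever on `queue.get()` if the child is killed before it can report a result.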